Windows 🖥️, Android 📱... Llama 🦙

With Llama 3.1, Meta takes another step towards becoming the “Windows of AI”.

Zuckerberg's (AKA the Zuck's) operating thesis for the AI space has always included an “open vs. closed” system mental model. Think Microsoft/Windows and Google/Android vs. Apple’s Mac/iPhone (Android itself is built on Linux, a huge inspiration for Zuck's approach here).

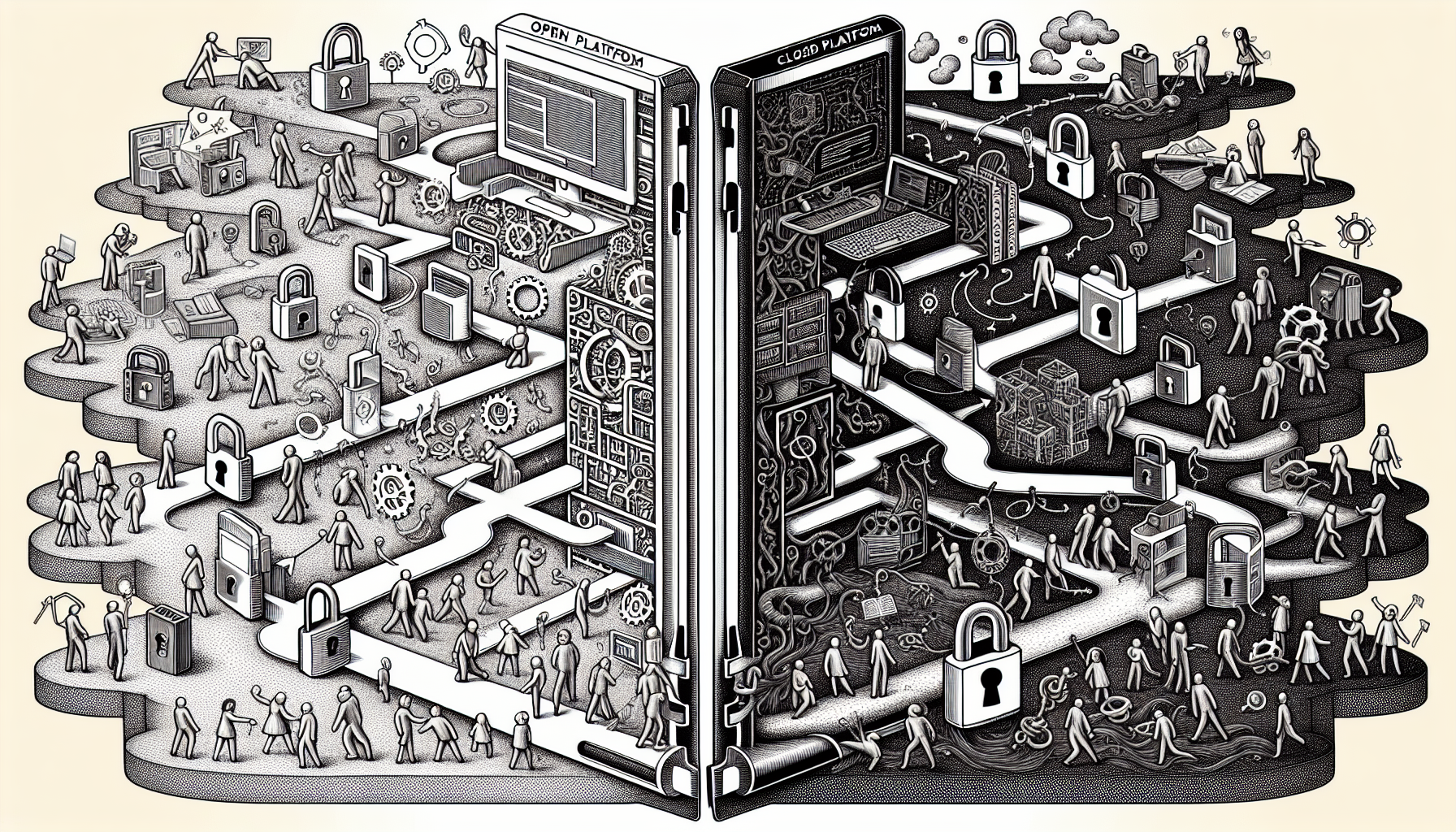

Open vs Closed Platforms

Open Platforms

On one side is a compute platform that’s open, customizable, and highly interoperable across devices and providers. Open platform ecosystems see a large diversity of products and providers. They’re also typically cheaper than their closed platform counterparts. Open platforms also tend (though not exclusively) to be more enterprise-friendly: outside of tech, most of corporate America uses Windows at work.

Closed Platforms

The closed model, in contrast, is companies creating “walled garden” ecosystems: a suite of products that all work well together, but don’t let users plug and play with different providers if they want to. These companies are typically highly vertically integrated, handling in-house many functions that open platforms would rely on the ecosystem for. Closed platforms have been successful at driving premium-feeling consumer products. While they are not especially customizable, this integration usually affords greater security and performance.

For example, consider Google's Android (open) vs. Apple's Mac/iOS (closed). It’s far easier for a developer to publish a mobile app to the Play Store than to the iOS App Store. The Android ecosystem is much more diverse and interoperable than Apple’s (in terms of the companies and devices I can use with Android). And Android phones are far more customizable than iPhones.

Apple’s closed model also has advantages. The App Store is safer and more mature than the Play Store: an iPhone user can explore and download new apps with reckless abandon in a way Android users simply cannot. Apple products work better together than Google products do, despite being less widely interoperable. And while iPhones haven’t historically been very customizable, they are generally more consumer-friendly, secure and durable.

This isn’t to say one model is better than the other. Typically, both closed and open systems have a part to play when a new computing platform enters the market.

Meta is angling to be the "open platform" of AI.

Zuck has bet on Meta being the “open platform” of the emerging AI space: the open, interoperable, enterprise-friendly option. Llama 3.1 shows Meta doubling down on this strategy in three ways:

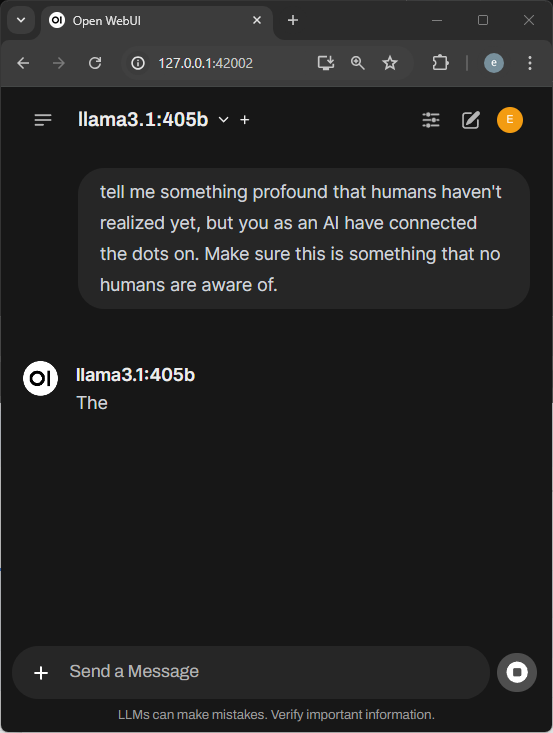

1. Can be run locally

A major blocker for enterprise LLM adoption so far has been the requirement to hand control of your data to another company. Previously, even simple GPT calls required giving OpenAI valuable user data in the prompt context. And finetuning a private model for your own purposes required giving up large parts of your data to both the foundational model provider and whatever service you used to finetune. Meta faces an additional headwind here, given its track record on using user data and respecting privacy.

Meta made Llama 3.1 available to be downloaded and run locally. This lets enterprises run this powerful foundational model on their own premises, eliminating any concerns about data moving between companies. It also means finetuning and distilling Llama 3.1 can be done locally, and arguably for free (if enterprises already have the compute).

Making their biggest model (405B) available to download and use locally is particularly telling. The 405B download is over 231 GB, far too large for any individual hacker to play with locally. As evidence, here's 405B running on an NVIDIA 4090, taking 30 minutes to produce the word "the".
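
To put that size in perspective, here is a rough back-of-the-envelope sketch of how much memory 405 billion weights need at different precisions. It is a simplification under stated assumptions: decimal gigabytes, weights only (no KV cache or activations), and no allowance for the extra scaling factors that real quantized file formats also store. The constant `RTX_4090_VRAM_GB` reflects the 24 GB of VRAM on a single consumer RTX 4090.

```python
import math

# Assumption: 1 GB = 1e9 bytes, and we count only the raw weights
# (no KV cache, activations, or quantization metadata).
def weight_memory_gb(num_params: float, bits_per_param: float) -> float:
    """Approximate storage needed for the model weights alone."""
    return num_params * bits_per_param / 8 / 1e9

RTX_4090_VRAM_GB = 24  # one consumer RTX 4090 card has 24 GB of VRAM
PARAMS_405B = 405e9

for bits in (16, 8, 4):
    gb = weight_memory_gb(PARAMS_405B, bits)
    # Minimum number of 24 GB cards needed just to hold the weights.
    gpus = math.ceil(gb / RTX_4090_VRAM_GB)
    print(f"405B @ {bits:>2}-bit: {gb:7.1f} GB of weights, at least {gpus} x 24 GB GPUs")
```

At 16 bits the raw weights come to about 810 GB, and even at 4 bits they are about 202.5 GB, which suggests the 231 GB figure refers to a roughly 4-bit quantized build rather than full-precision weights. Either way, the weights alone span many 24 GB cards.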

Meta is aiming squarely at enterprises here: large companies are the only ones with the infrastructure to run this model. It also makes Llama 3.1 an extremely formidable option from a cost perspective. Instead of paying another company per inference for access to a closed model, why not download an equally strong model for free and run it yourself?

2. Distribution via inference-as-a-service platforms

Meta also decided not to host Llama 3.1 itself, and instead to lean on existing inference-as-a-service players for distribution. Unlike OpenAI’s GPTs, which can be called through OpenAI’s own APIs, Llama 3.1 is only available as a hosted API via third-party platforms like Groq, Together AI and AWS Bedrock.

Signals like this help ease concerns about vendor lock-in. It also affirms Meta’s commitment to focusing on developing foundational models, while relying on the ecosystem for distribution and for powering inference.

On a practical note, this also helps small and mid-sized companies that may not have the compute to run Llama 3.1 locally, but are still concerned about data privacy. Inference-as-a-service platforms like Groq have committed to serving inference while retaining zero data.

3. Easily customizable

Llama 3.1 is easier and cheaper to customize for specific needs than any similarly sized foundational model. Because Llama is available to download and the model weights are open, it can easily be finetuned on your own data. And in contrast with the ‘finetune as a service’ vendors, developers have a lot of control over how they finetune Llama 3.1 (how much GPU to allocate, how long it should take, how much it costs, etc.). Dell also noted that Llama 3.1 is particularly suited for synthetic data generation, effectively letting it assist in its own finetuning.

Meta also released Llama 3.1 in three sizes (8B, 70B and 405B), and each is publicly available to be distilled, quantized or further finetuned. Assuming teams have the GPUs, this affords a ton of flexibility in trading off model size against performance on specific tasks.
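
For readers who haven't seen quantization up close, the toy sketch below shows the core idea using symmetric ("absmax") int8 quantization on a short list of weights. This is a generic textbook technique chosen for illustration, not the method in Meta's cookbooks; real toolchains usually quantize per channel or per block and often go down to 4 bits. The function names `quantize_absmax_int8` and `dequantize` are hypothetical.

```python
# Toy symmetric ("absmax") int8 quantization of one weight tensor.
# Illustration only: not Meta's method; real tools work per channel/block.

def quantize_absmax_int8(weights: list[float]) -> tuple[list[int], float]:
    """Map floats in [-max|w|, +max|w|] onto integers in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # avoid dividing by zero
    # Round to the nearest integer step and clamp to the int8 range.
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale

def dequantize(quantized: list[int], scale: float) -> list[float]:
    """Recover approximate floats; per-weight error is at most scale / 2."""
    return [q * scale for q in quantized]

weights = [0.5, -1.27, 0.0, 1.0, 0.031]
q, scale = quantize_absmax_int8(weights)
restored = dequantize(q, scale)
max_err = max(abs(w - r) for w, r in zip(weights, restored))
print(q, f"scale={scale:.4f}", f"max_error={max_err:.4f}")
```

Storing one byte per weight plus a single float scale halves memory relative to 16-bit weights, at the cost of a small rounding error bounded by half the scale. That size-versus-accuracy trade-off is what makes quantized variants attractive for teams with limited GPUs.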

It’s clear Meta wants you to use Llama 3.1 as a starter model to be customized for your own needs: they’ve publicly released cookbooks for finetuning and quantizing Llama 3.1.

What's Meta's long term play here?

Meta's approach does feel unique compared with its peers. Meta has no hand in the compute, inference or "LLMOps" side of things. They seem to be focused on providing useful models to the open source community. For example, last year they released LlamaGuard to the OSS community: a small language model, finetuned from Llama 2, that evaluates conversations between humans and AI agents. This makes me wonder how (or if?) Meta ever plans on 'cashing in' on its AI work.

Amazon, Google and Apple have all taken steps towards more obviously monetizable aspects of AI: compute, inference, evaluation, distribution, etc. Meta, in contrast, is clearly forgoing all of these opportunities (for now) in favor of focusing only on releasing models (foundational models such as Llama 3.1, or specialized smaller models like LlamaGuard).

Perhaps Meta believes the future of AI is multiple models of varying sizes: some large general-purpose models, alongside more specialized smaller models. Maybe they believe that having more of these models built by, or at least derived from, Meta can only be a good thing.

Or perhaps Meta is learning from its experience with React and React Native. The open source community helped make React and React Native better, which in turn benefited Meta's own products. It also helped widen their talent funnel (more React devs to hire) and position their brand as "thought leaders" of the ecosystem.

Very grateful for all the OSS contributions Meta will make to the space while they figure this out!

👨‍💻

📣 If you are building with AI/LLMs, please check out our project lytix. It's an observability + evaluation platform for everything that happens in your LLM.

📖 If you liked this, sign up for our newsletter below. You can check out our other posts here.