Using lytix to observe lytix

We've added a bunch of new integrations to lytix, but making sure each one works as expected has been a challenge, especially as we add new features that need to work across all of our existing integrations.

Integration tests were the answer for us, as they gave us the best coverage for the amount of work. But how often can we run them? Ideally I'd run them every minute of every day, but that might be cost prohibitive.

This post covers how we use lytix internally to answer that question.

(For those who are unfamiliar, lytix is an observability + evaluation middleware for LLM providers.)

🧪 Integration Tests

To give some context, here is what I mean by integration tests. Essentially, this boils down to actually calling the provider (OpenAI, Anthropic, Google) and verifying that we get a response and that the expected data shows up in lytix.

At its core, we loop through every provider and model combination, and each iteration looks something like this:

import OpenAI from 'openai'
import * as crypto from 'node:crypto'

const uniqueSessionId = crypto.randomUUID()
const workflowName = 'test-workflow'
const metadataKey = 'test-metadata-key'

const client = new OpenAI({
  // @see https://docs.lytix.co/Quickstart/openai-integration#update-your-openai-sdk
})

const response = await client.chat.completions.create({
  model: 'gpt-4o-mini',
  messages: [
    {
      role: 'user',
      // Embedding the session id makes this exact request easy to find in lytix
      content: `Hello world ${uniqueSessionId}`
    }
  ],
  ...
})

Now I can just look up the event with that uniqueSessionId and verify that the data is what I expect.
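Putting the loop and the lookup together, a minimal sketch of the whole test run might look like the following. It is an illustration, not our actual test suite: `runIntegrationTests`, `TestCase`, and the event shape are hypothetical, and the provider call and the lytix lookup are injected as the hypothetical functions `callProvider` and `findEventBySessionId`, so the sketch makes no claims about the real lytix API.

```typescript
import { randomUUID } from 'node:crypto'

type Provider = 'openai' | 'anthropic' | 'google'

// One provider + model combination to exercise (illustrative).
interface TestCase {
  provider: Provider
  model: string
}

// Hypothetical shape of an event as looked up in lytix.
interface LoggedEvent {
  sessionId: string
  provider: string
  model: string
}

interface Deps {
  // Hypothetical: sends `Hello world ${sessionId}` to the provider through lytix.
  callProvider: (testCase: TestCase, sessionId: string) => Promise<string>
  // Hypothetical: fetches the logged event for a session id, or undefined if missing.
  findEventBySessionId: (sessionId: string) => Promise<LoggedEvent | undefined>
}

interface TestResult {
  testCase: TestCase
  sessionId: string
  ok: boolean
  reason?: string
}

async function runIntegrationTests(cases: TestCase[], deps: Deps): Promise<TestResult[]> {
  const results: TestResult[] = []
  for (const testCase of cases) {
    // A fresh id per call lets us find exactly this request's event afterwards.
    const sessionId = randomUUID()
    const reply = await deps.callProvider(testCase, sessionId)
    if (!reply) {
      results.push({ testCase, sessionId, ok: false, reason: 'empty response' })
      continue
    }
    const event = await deps.findEventBySessionId(sessionId)
    if (!event) {
      results.push({ testCase, sessionId, ok: false, reason: 'event not found' })
    } else if (event.provider !== testCase.provider || event.model !== testCase.model) {
      results.push({ testCase, sessionId, ok: false, reason: 'metadata mismatch' })
    } else {
      results.push({ testCase, sessionId, ok: true })
    }
  }
  return results
}
```

Injecting the two calls keeps the loop testable with fakes and lets the same loop cover every SDK integration.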

💸 Cost

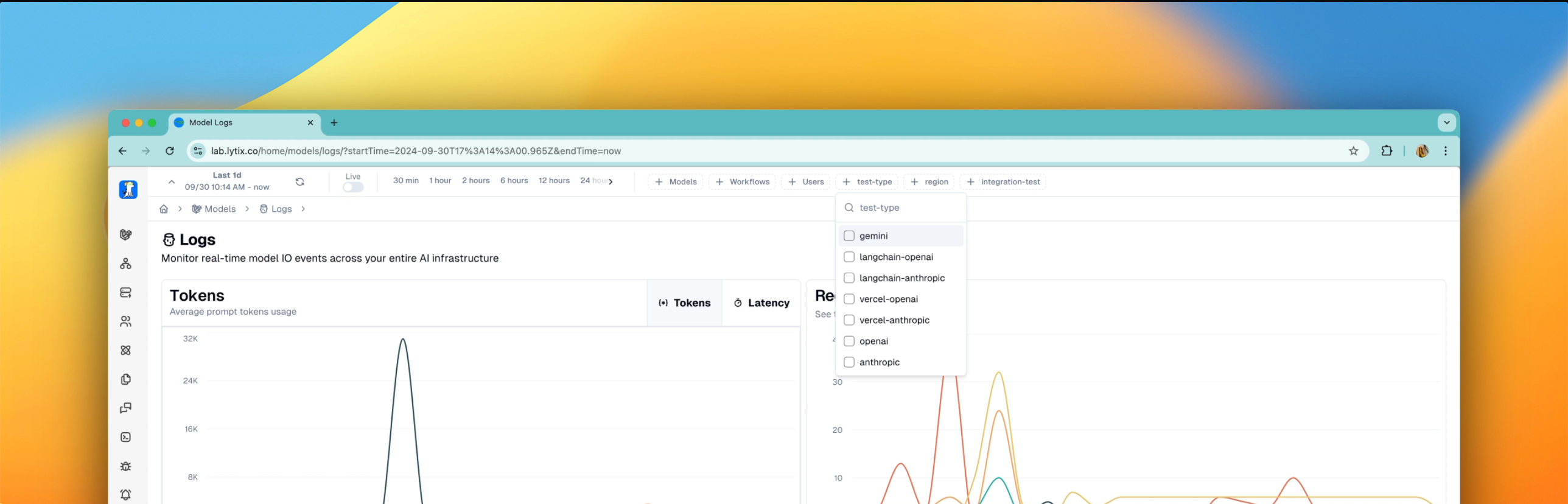

The first question I had is how often can I run this test? I'd like to run it every minute, but that might be cost prohibitive. Thankfully with lytix we can specifically see the cost for running all integrations tests:

I can further subdivide this to see whether a specific type of test is costing more (e.g. our LangChain test vs. our Gemini test).

I can also compare the cost on our EU servers against our US servers.
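With a per-run cost in hand, "how often can I run this?" becomes simple arithmetic. The sketch below is a minimal illustration under stated assumptions: every run of the suite costs the same, a month is 30 days, and `projectedMonthlyCost` and `minIntervalForBudget` are hypothetical helpers, not part of lytix. The cost and budget you plug in are your own figures.

```typescript
// Assumes a 30-day month and a constant cost per run of the suite.
const MINUTES_PER_MONTH = 60 * 24 * 30

// Projected monthly spend if the full suite runs every `intervalMinutes`.
function projectedMonthlyCost(costPerRunUsd: number, intervalMinutes: number): number {
  if (intervalMinutes <= 0) throw new RangeError('intervalMinutes must be positive')
  const runsPerMonth = Math.floor(MINUTES_PER_MONTH / intervalMinutes)
  return costPerRunUsd * runsPerMonth
}

// Smallest whole-minute interval whose projected monthly cost fits the budget.
function minIntervalForBudget(costPerRunUsd: number, monthlyBudgetUsd: number): number {
  if (monthlyBudgetUsd < 0) throw new RangeError('monthlyBudgetUsd must be non-negative')
  if (costPerRunUsd <= 0) return 1
  let interval = 1
  // Terminates: once interval exceeds MINUTES_PER_MONTH, the projection is 0.
  while (projectedMonthlyCost(costPerRunUsd, interval) > monthlyBudgetUsd) interval++
  return interval
}
```

Running every minute means 43,200 runs a month, so even a fraction of a cent per run adds up; `minIntervalForBudget` walks the interval up one minute at a time until the projection fits the budget.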

🧱 Features That Make This Possible

To get this breakdown, I used the following lytix features:

Workflows

To separate my integration test logs from every other log, I started by grouping them all under a single workflow:

...
const response = await client.chat.completions.create(
  {
    ...
  },
  {
    // Request options (including headers) go in the second argument, not the request body
    headers: {
      workflowName: 'integration-test',
    },
  },
)

This lets me filter out unrelated logs and focus on a single workflow. It also lets me slice cost by workflow.

📓 Check out the Workflows documentation here
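lytix does this filtering and grouping for you in its dashboard. Conceptually, tagging every request with a workflow name turns both operations into a filter and a group-by. The sketch below illustrates that idea over a hypothetical `LogRecord` shape; the shape and the helper names `costByWorkflow` and `logsForWorkflow` are assumptions, not the lytix data model.

```typescript
// Hypothetical shape of a logged LLM call (not the lytix export format).
interface LogRecord {
  workflowName?: string
  costUsd: number
}

// Total cost per workflow; calls without a workflow are grouped under 'unassigned'.
function costByWorkflow(logs: LogRecord[]): Map<string, number> {
  const totals = new Map<string, number>()
  for (const log of logs) {
    const key = log.workflowName ?? 'unassigned'
    totals.set(key, (totals.get(key) ?? 0) + log.costUsd)
  }
  return totals
}

// Keeps only the calls that belong to one workflow, e.g. 'integration-test'.
function logsForWorkflow(logs: LogRecord[], workflowName: string): LogRecord[] {
  return logs.filter((log) => log.workflowName === workflowName)
}
```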

Metadata

To break the data down further by region and by specific test, I send metadata along with each request:

...
const response = await client.chat.completions.create(
  {
    ...
  },
  {
    headers: {
      // Identifies which integration test sent this request
      [`lytix-metadata-key-${metadataKey}`]: 'openai',
      // Region the test runs from; falls back to us-east-1
      'lytix-metadata-key-region': LX_REGION ?? 'us-east-1',
    },
  },
)

This lets me subdivide the data further within my workflow.

📓 Check out the Metadata documentation here
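Because every metadata header follows the `lytix-metadata-key-<key>` pattern used in the request above, a small helper can build them from a plain object. The sketch below does that and then sums cost by one metadata key, mirroring the per-test and per-region breakdown. `metadataHeaders`, `costByMetadata`, and the `TaggedLog` record shape are hypothetical, not part of the lytix SDK.

```typescript
// Builds lytix metadata headers from a plain object, following the
// `lytix-metadata-key-<key>` naming shown in the request above.
function metadataHeaders(metadata: Record<string, string>): Record<string, string> {
  const headers: Record<string, string> = {}
  for (const [key, value] of Object.entries(metadata)) {
    headers[`lytix-metadata-key-${key}`] = value
  }
  return headers
}

// Hypothetical log record carrying the metadata that was sent with the request.
interface TaggedLog {
  metadata: Record<string, string>
  costUsd: number
}

// Sums cost by the value of one metadata key (e.g. 'region');
// logs missing that key are grouped under 'unknown'.
function costByMetadata(logs: TaggedLog[], key: string): Map<string, number> {
  const totals = new Map<string, number>()
  for (const log of logs) {
    const value = log.metadata[key] ?? 'unknown'
    totals.set(value, (totals.get(value) ?? 0) + log.costUsd)
  }
  return totals
}
```

For example, `metadataHeaders({ 'test-metadata-key': 'openai', region: 'us-east-1' })` yields the same two headers as the request above and can be passed as the `headers` request option.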

👨‍💻

📣 If you are building with AI/LLMs, please check out our project lytix. It's an observability + evaluation platform for everything that happens in your LLM.

📖 If you liked this, sign up for our newsletter below. You can check out our other posts here.